Multithreading refers to running multiple independent execution paths (threads) within a single program (process). Each thread can work on a different task. The threads run concurrently, or in parallel on a multi-core CPU, which improves execution efficiency and resource utilization. Here is a detailed analysis of multithreading:

I. Core Concepts

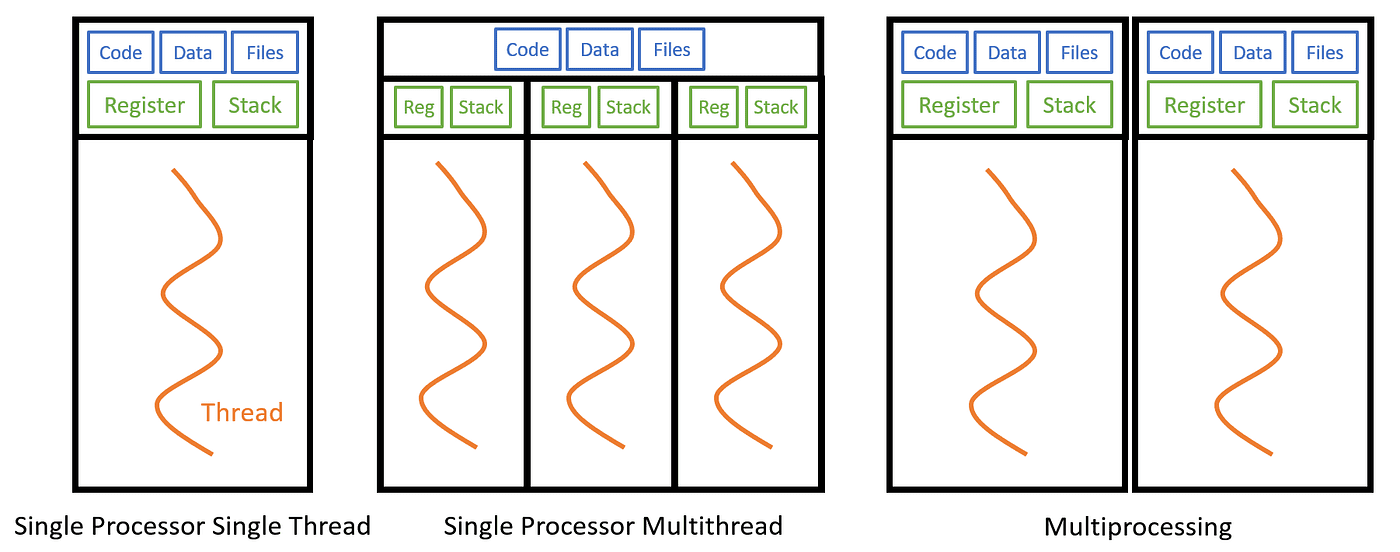

Process vs. Thread

- Process: The basic unit to which the operating system allocates resources (such as memory and file handles). Each process contains at least one main thread.

- Thread: The smallest unit of execution within a process. Threads share the process's resources (such as memory space and global variables), but each thread has its own call stack, program counter, and register state.

- Relationship: A process can contain multiple threads, all running within the process's resources. Switching between threads is cheaper than switching between processes, because threads of the same process share one address space.

Goals of Multithreading

- Improve efficiency: Utilize multi-core CPUs for parallel task processing (e.g., downloading files and playing music simultaneously).

- Enhance user experience: Avoid program unresponsiveness caused by single-thread blocking (e.g., in GUI programs, the main thread handles the interface, while sub-threads handle data loading).

- Resource sharing: Threads share process memory, eliminating the need for complex IPC (Inter-Process Communication) mechanisms.

II. Key Characteristics of Multithreading

Parallelism vs. Concurrency

- Parallelism: Multiple threads execute simultaneously on multi-core CPUs (truly “running at the same time”).

- Concurrency: Multiple threads alternate execution on a single-core CPU through time-slice rotation (macroscopically “running at the same time,” microscopically serial).

Shared Resources and Race Conditions

- Shared resources: Threads share the process’s memory space, such as global variables and object instances.

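- Race Condition: When multiple threads access shared data concurrently and at least one of them modifies it, the result depends on timing and can be unpredictable. For example, count++ is a read-modify-write sequence. Two threads can read the same value, and one of the increments is then lost.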

- Solutions:

- Synchronization mechanisms: Use locks, semaphores, atomic variables, etc., to coordinate access to shared data. For example, a lock allows only one thread at a time into a critical section.

- Thread-safe classes: Use thread-safe containers from standard libraries (e.g., Java’s ConcurrentHashMap).
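The following Java sketch shows the counter race and two of the fixes listed above. It is a minimal illustration, not a benchmark. The class name `CounterDemo` and the thread and iteration counts are arbitrary choices. The plain `int` counter uses an unsynchronized `count++`, so lost updates are possible but not guaranteed on any particular run. The `synchronized` counter and the `AtomicInteger` counter always reach the exact total.

```java
import java.util.ArrayList;
import java.util.List;
import java.util.concurrent.atomic.AtomicInteger;

class CounterDemo {
    static int unsafeCount = 0;                        // plain field: count++ is read-modify-write
    static int syncCount = 0;                          // only updated while holding LOCK
    static final Object LOCK = new Object();
    static final AtomicInteger atomicCount = new AtomicInteger();

    // Starts `threads` threads; each increments all three counters `perThread` times.
    static void run(int threads, int perThread) throws InterruptedException {
        List<Thread> workers = new ArrayList<>();
        for (int t = 0; t < threads; t++) {
            Thread w = new Thread(() -> {
                for (int i = 0; i < perThread; i++) {
                    unsafeCount++;                     // race condition: increments can be lost
                    synchronized (LOCK) {              // lock: one thread at a time in this block
                        syncCount++;
                    }
                    atomicCount.incrementAndGet();     // atomic variable: lock-free, never loses updates
                }
            });
            workers.add(w);
            w.start();
        }
        for (Thread w : workers) {
            w.join();                                  // wait for every worker to finish
        }
    }

    public static void main(String[] args) throws InterruptedException {
        run(4, 100_000);
        System.out.println("unsafe:       " + unsafeCount);        // may be less than 400000
        System.out.println("synchronized: " + syncCount);          // always 400000
        System.out.println("atomic:       " + atomicCount.get());  // always 400000
    }
}
```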

Thread Lifecycle

- New: A thread object is created but not started.

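- Runnable: start() has been called and the thread is ready, waiting to be scheduled on a CPU.

- Running: The thread has been given CPU time and is executing its run() method.

- Blocked: The thread is paused while it waits for a lock, an I/O operation, another thread (e.g., join()), or a timeout (e.g., sleep()).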

- Terminated: The thread finishes execution or terminates abnormally.
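In Java, `Thread.getState()` returns a value of the `Thread.State` enum. The enum has six values: `NEW`, `RUNNABLE` (which covers both "Runnable" and "Running" above), `BLOCKED`, `WAITING`, `TIMED_WAITING`, and `TERMINATED`. The sketch below observes some of these states. The class name `LifecycleDemo` is hypothetical. The current thread holds a monitor that the worker needs, which forces the worker into `BLOCKED` and makes the observed sequence deterministic.

```java
class LifecycleDemo {
    // Returns the sequence of states observed for one worker thread.
    static String observe() throws InterruptedException {
        Object lock = new Object();
        Thread worker = new Thread(() -> {
            synchronized (lock) {
                // Entering the monitor is all this worker does; then it terminates.
            }
        });
        StringBuilder seen = new StringBuilder();
        seen.append(worker.getState());                    // NEW: created, start() not yet called
        synchronized (lock) {                              // the current thread holds the monitor
            worker.start();                                // worker is now RUNNABLE...
            while (worker.getState() != Thread.State.BLOCKED) {
                Thread.yield();                            // ...until it blocks on the held monitor
            }
            seen.append(" -> ").append(worker.getState()); // BLOCKED
        }                                                  // releasing the monitor lets it finish
        worker.join();                                     // wait for run() to complete
        seen.append(" -> ").append(worker.getState());     // TERMINATED
        return seen.toString();
    }

    public static void main(String[] args) throws InterruptedException {
        System.out.println(observe()); // prints NEW -> BLOCKED -> TERMINATED
    }
}
```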

III. Implementation Methods of Multithreading

Different programming languages and frameworks provide various ways to create threads. The common approaches in Java are:

1. Inheriting the Thread Class (Basic Method)

Core idea: Create a class that inherits the Thread class, override the run() method to define the thread task, and start the thread via the start() method.

Code example:

```java
public class MyThread extends Thread {

    // Override the run() method to define thread execution logic
    @Override
    public void run() {
        System.out.println("Thread " + getName() + " is running");
    }

    public static void main(String[] args) {
        MyThread thread = new MyThread();
        thread.setName("Custom Thread"); // Set thread name
        thread.start(); // Start the thread (do not directly call run())
    }
}
```

Features:

- Advantages: Simple and intuitive, with direct operation of thread objects.

- Disadvantages: Java does not support multiple inheritance of classes, so a subclass of Thread cannot extend any other class. The task is also tightly coupled to the thread, which makes sharing resources between threads inconvenient.

- Applicable scenarios: Simple independent thread tasks without the need for state sharing.

2. Implementing the Runnable Interface (Recommended Method)

Core idea: Create a class that implements the Runnable interface and define the task in its run() method. Then pass an instance of the class to the Thread constructor and start the thread.

Code example:

```java
public class MyRunnable implements Runnable {

    @Override
    public void run() {
        System.out.println("Thread " + Thread.currentThread().getName() + " is running");
    }

    public static void main(String[] args) {
        Runnable task = new MyRunnable(); // Create task object
        Thread thread = new Thread(task); // Bind task to thread
        thread.setName("Runnable Thread");
        thread.start();
    }
}
```

Features:

- Advantages: Avoids the limitation of single inheritance; supports resource sharing (multiple threads can share the same Runnable instance); decouples “threads” from “tasks”.

- Disadvantages: The task still has to be wrapped in a Thread (or submitted to an executor). In addition, run() can neither return a value nor throw checked exceptions.

- Applicable scenarios: Scenarios where multiple threads share resources (e.g., counters, caches) or need to inherit other classes.
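To illustrate the resource-sharing advantage, several threads can start the same `Runnable` instance. In this sketch the `TicketSeller` task and the ticket counts are hypothetical. The shared counters are `AtomicInteger`s so that the shared state stays consistent. Because `Runnable` is a functional interface, Java 8+ also accepts a lambda in place of a named class.

```java
import java.util.concurrent.atomic.AtomicInteger;

class SharedRunnableDemo {
    // One task object; every thread that runs it shares these fields.
    static class TicketSeller implements Runnable {
        private final int total;
        private final AtomicInteger nextTicket = new AtomicInteger(0);
        final AtomicInteger sold = new AtomicInteger(0);

        TicketSeller(int total) {
            this.total = total;
        }

        @Override
        public void run() {
            // getAndIncrement() gives each ticket number to exactly one thread.
            while (nextTicket.getAndIncrement() < total) {
                sold.incrementAndGet();
            }
        }
    }

    // Starts `threads` threads that all run the same TicketSeller; returns tickets sold.
    static int sell(int tickets, int threads) throws InterruptedException {
        TicketSeller seller = new TicketSeller(tickets);
        Thread[] workers = new Thread[threads];
        for (int i = 0; i < threads; i++) {
            workers[i] = new Thread(seller, "seller-" + i); // same Runnable, different threads
            workers[i].start();
        }
        for (Thread w : workers) {
            w.join();
        }
        return seller.sold.get();
    }

    public static void main(String[] args) throws InterruptedException {
        System.out.println(sell(100, 3)); // prints 100: every ticket sold exactly once

        // Runnable is a functional interface, so a lambda also works (Java 8+).
        Thread t = new Thread(
                () -> System.out.println("Running in " + Thread.currentThread().getName()),
                "Lambda Thread");
        t.start();
        t.join();
    }
}
```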

3. Implementing the Callable Interface (Threads with Return Values)

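Core idea: Use the Callable interface to define a task that returns a value (and may throw a checked exception), and retrieve the result through a Future. Callable tasks are usually submitted to an ExecutorService thread pool. They can also be wrapped in a FutureTask and run by a plain Thread.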

Code example:

```java
import java.util.concurrent.*;

public class MyCallable implements Callable<String> { // Generic specifies return type

    @Override
    public String call() throws Exception { // Can throw exceptions and support return values
        Thread.sleep(1000); // Simulate time-consuming task
        return "Task completed, current time: " + System.currentTimeMillis();
    }

    public static void main(String[] args) throws ExecutionException, InterruptedException {
        ExecutorService executor = Executors.newSingleThreadExecutor(); // Create thread pool
        Callable<String> task = new MyCallable();
        Future<String> future = executor.submit(task); // Submit task and get Future object

        // Get thread execution result (can block waiting or set timeout)
        String result = future.get(); // Block until task completion
        System.out.println("Thread return result: " + result);

        executor.shutdown(); // Close the thread pool
    }
}
```

Features:

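- Advantages: Returns a result and can throw checked exceptions. The caller receives any such exception from Future.get(), wrapped in an ExecutionException. When combined with a thread pool, threads are reused instead of being created for every task.

- Disadvantages: Requires an ExecutorService or a FutureTask wrapper, so the code is somewhat more complex.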

- Applicable scenarios: Scenarios requiring thread execution results or exception handling (e.g., asynchronous computing, task partitioning).
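A `Callable` does not strictly require a thread pool. `FutureTask` implements both `Runnable` and `Future`, so it can be handed to a plain `Thread`. The sketch below shows that route, a `get()` call with a timeout, and how an exception thrown inside `call()` reaches the caller wrapped in `ExecutionException`. The class and method names are illustrative.

```java
import java.util.concurrent.Callable;
import java.util.concurrent.ExecutionException;
import java.util.concurrent.FutureTask;
import java.util.concurrent.TimeUnit;

class FutureTaskDemo {
    // Runs a Callable on a plain Thread via FutureTask (no ExecutorService).
    static int sumTo(int n) throws Exception {
        Callable<Integer> sum = () -> {
            int total = 0;
            for (int i = 1; i <= n; i++) {
                total += i;
            }
            return total;
        };
        FutureTask<Integer> task = new FutureTask<>(sum); // FutureTask is both Runnable and Future
        new Thread(task, "callable-thread").start();
        return task.get(5, TimeUnit.SECONDS);              // waits at most 5 s, else TimeoutException
    }

    // An exception thrown in call() is rethrown by get(), wrapped in ExecutionException.
    static String failing() throws InterruptedException {
        Callable<String> failingCall = () -> {
            throw new IllegalStateException("boom");
        };
        FutureTask<String> task = new FutureTask<>(failingCall);
        new Thread(task).start();
        try {
            return task.get();
        } catch (ExecutionException e) {
            return "caught: " + e.getCause().getMessage();
        }
    }

    public static void main(String[] args) throws Exception {
        System.out.println(sumTo(100));  // prints 5050
        System.out.println(failing());   // prints caught: boom
    }
}
```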

4. Using Thread Pools (ExecutorService Framework)

Core idea: Manage thread pools via the Executor framework to reuse thread objects, avoiding the overhead of frequent thread creation/destruction.

Creating pools through the Executors factory methods is not recommended for production use (see "Recommended Thread Pool" below for the reasons). The four common factory-created types are:

(I) Four Types of Thread Pools

1. FixedThreadPool (Fixed-Size Thread Pool)

- Creation method:

```java
ExecutorService fixedPool = Executors.newFixedThreadPool(nThreads); // nThreads: number of worker threads
```

- Core features:

- Core threads = Maximum threads = nThreads; threads are fixed and not destroyed.

- The task queue is an unbounded LinkedBlockingQueue, which may cause OOM (Out Of Memory) when tasks back up.

- Applicable scenarios: Long-running tasks with a fixed level of concurrency (e.g., database connection pool threads). Because the queue is unbounded, watch how long it grows.
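One way to see that the threads are fixed and reused is to record which worker runs each task. In this sketch 20 tasks are submitted to a pool of 2 threads, and the set of worker names never grows beyond 2. The class name and task counts are arbitrary.

```java
import java.util.Set;
import java.util.concurrent.ConcurrentHashMap;
import java.util.concurrent.ExecutorService;
import java.util.concurrent.Executors;
import java.util.concurrent.TimeUnit;

class FixedPoolDemo {
    // Runs `tasks` short tasks on `nThreads` threads; returns the distinct worker thread names.
    static Set<String> workerNames(int nThreads, int tasks) throws InterruptedException {
        ExecutorService fixedPool = Executors.newFixedThreadPool(nThreads);
        Set<String> names = ConcurrentHashMap.newKeySet(); // thread-safe set
        for (int i = 0; i < tasks; i++) {
            fixedPool.execute(() -> names.add(Thread.currentThread().getName()));
        }
        fixedPool.shutdown();                              // no new tasks; queued ones still run
        fixedPool.awaitTermination(10, TimeUnit.SECONDS);  // wait for the queue to drain
        return names;
    }

    public static void main(String[] args) throws InterruptedException {
        // 20 tasks, but at most 2 distinct names: the same two threads are reused.
        System.out.println(workerNames(2, 20));
    }
}
```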

2. CachedThreadPool (Cacheable Thread Pool)

- Creation method:

```java
ExecutorService cachedPool = Executors.newCachedThreadPool();
```

- Core features:

- Core threads = 0; maximum threads = Integer.MAX_VALUE (theoretically unbounded).

- The task queue is a SynchronousQueue, which hands each task directly to a thread. Threads that stay idle for 60 seconds are destroyed.

- Risks: Submitting a large number of tasks in a short time may create a huge number of threads, leading to CPU exhaustion or OOM.

- Applicable scenarios: A small number of short-term asynchronous tasks (e.g., temporary calculations), not suitable for high-concurrency scenarios.

3. SingleThreadExecutor (Single-Thread Pool)

- Creation method:

```java
ExecutorService singlePool = Executors.newSingleThreadExecutor();
```

- Core features:

- Contains only 1 core thread, ensuring tasks are executed serially in order.

- The task queue is an unbounded LinkedBlockingQueue, with the same OOM risk.

- Applicable scenarios: Tasks requiring sequential execution (e.g., singleton resource operations, database serial writes).
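Sequential execution can be seen by having each task append its index to a list. The sketch below relies on the single worker thread for ordering. It waits on the last task's `Future`, which also guarantees that the list writes are visible to the caller. The names are illustrative.

```java
import java.util.ArrayList;
import java.util.List;
import java.util.concurrent.ExecutorService;
import java.util.concurrent.Executors;
import java.util.concurrent.Future;

class SingleThreadDemo {
    // Submits `tasks` tasks that record their index; returns the order in which they ran.
    static List<Integer> runInOrder(int tasks) throws Exception {
        ExecutorService singlePool = Executors.newSingleThreadExecutor();
        List<Integer> order = new ArrayList<>(); // only the single worker thread writes to it
        try {
            Future<?> last = null;
            for (int i = 0; i < tasks; i++) {
                final int id = i;
                last = singlePool.submit(() -> { order.add(id); });
            }
            if (last != null) {
                last.get(); // wait for the final task; also makes the worker's writes visible here
            }
            return order;
        } finally {
            singlePool.shutdown();
        }
    }

    public static void main(String[] args) throws Exception {
        System.out.println(runInOrder(5)); // prints [0, 1, 2, 3, 4]
    }
}
```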

4. ScheduledThreadPool (Scheduled Task Thread Pool)

- Creation method:

```java
ScheduledExecutorService scheduledPool = Executors.newScheduledThreadPool(corePoolSize); // corePoolSize: number of core threads
```

- Core features:

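- The number of core threads is set by corePoolSize, and maximumPoolSize is Integer.MAX_VALUE. However, tasks wait in an unbounded delay queue, so in practice the pool never grows beyond corePoolSize.

- Supports delayed one-shot tasks (schedule) and periodic tasks. Periodic tasks run either at a fixed rate (scheduleAtFixedRate) or with a fixed delay between the end of one run and the start of the next (scheduleWithFixedDelay).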

- Applicable scenarios: Timed tasks (e.g., log flushing, cache expiration cleaning), periodic tasks (e.g., heartbeat detection).
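The sketch below shows the scheduling API with one delayed task via `schedule` and a periodic "heartbeat" via `scheduleAtFixedRate`. The 10 ms delay, the 50 ms period, and the `CountDownLatch` used to wait for a few heartbeats are illustrative choices, not part of the original text.

```java
import java.util.concurrent.CountDownLatch;
import java.util.concurrent.Executors;
import java.util.concurrent.ScheduledExecutorService;
import java.util.concurrent.ScheduledFuture;
import java.util.concurrent.TimeUnit;

class ScheduledDemo {
    // Schedules a one-shot delayed task and a periodic heartbeat, then waits for `beats` heartbeats.
    static String run(int beats) throws Exception {
        ScheduledExecutorService scheduledPool = Executors.newScheduledThreadPool(1);
        CountDownLatch heartbeats = new CountDownLatch(beats);
        try {
            // One-shot: runs once after a 10 ms delay and returns a value.
            ScheduledFuture<String> once =
                    scheduledPool.schedule(() -> "delayed task done", 10, TimeUnit.MILLISECONDS);
            // Periodic: first run immediately, then every 50 ms (fixed rate).
            // scheduleWithFixedDelay(...) would instead wait 50 ms after each run finishes.
            scheduledPool.scheduleAtFixedRate(heartbeats::countDown, 0, 50, TimeUnit.MILLISECONDS);
            boolean reached = heartbeats.await(5, TimeUnit.SECONDS);
            return once.get() + ", heartbeats reached: " + reached;
        } finally {
            // By default, periodic tasks are cancelled when the executor shuts down.
            scheduledPool.shutdown();
        }
    }

    public static void main(String[] args) throws Exception {
        System.out.println(run(3)); // prints delayed task done, heartbeats reached: true
    }
}
```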

(II) Recommended Thread Pool: Manually Creating ThreadPoolExecutor

Why not recommend Executors?

Thread pools created by Executors use either unbounded queues (e.g., LinkedBlockingQueue) or an effectively unlimited maximum thread count (Integer.MAX_VALUE), which may cause:

- Memory overflow: A large number of tasks back up in unbounded queues, exhausting memory.

- Resource exhaustion: CachedThreadPool may create too many threads, leading to CPU saturation or increased thread competition.

Recommended method: Manually construct ThreadPoolExecutor

Customize seven parameters via the ThreadPoolExecutor constructor to precisely control thread pool behavior:

```java
ThreadPoolExecutor executor = new ThreadPoolExecutor(
        corePoolSize,    // Core thread count
        maximumPoolSize, // Maximum thread count
        keepAliveTime,   // Non-core thread survival time
        unit,            // Time unit
        workQueue,       // Task queue
        threadFactory,   // Thread factory
        handler          // Rejection policy
);
```

(III) Detailed Explanation of Seven Core Parameters of Thread Pools

- corePoolSize (Core Thread Count)

- Definition: The number of resident core threads in the thread pool, which are not destroyed even with no tasks (unless allowCoreThreadTimeOut is set to true).

- Role:

- When a task is submitted and fewer than corePoolSize threads are running, a new core thread is created to run it, even if other core threads are idle.

- Common rules of thumb by task type:

- CPU-bound tasks: CPU cores + 1. This keeps every core busy, and the extra thread covers occasional stalls (such as page faults) without adding much context switching.

- I/O-bound tasks: 2 * CPU cores. Threads spend time blocked on I/O, so more threads are needed to keep the CPU busy.

- maximumPoolSize (Maximum Thread Count)

- Definition: The maximum number of threads allowed in the thread pool (core threads + non-core threads).

- Trigger condition: A new (non-core) thread is created only when the task queue is full and the current number of threads is below maximumPoolSize.

- Note: Non-core threads are destroyed when their idle time exceeds keepAliveTime. If the task queue is an unbounded queue (e.g., LinkedBlockingQueue), maximumPoolSize is ineffective (the queue never fills, so no non-core threads are created).

- keepAliveTime (Survival Time)

- Definition: The survival time of non-core threads in an idle state; they are destroyed after timeout.

- Scope: When the number of threads > corePoolSize, idle non-core threads are reclaimed after exceeding keepAliveTime. It can be dynamically adjusted via setKeepAliveTime().

- unit (Time Unit)

- Optional values: TimeUnit enumeration (NANOSECONDS, MICROSECONDS, MILLISECONDS, SECONDS, etc.).

- Example: keepAliveTime = 30, unit = TimeUnit.SECONDS means non-core threads survive for up to 30 seconds.

- workQueue (Task Queue)

- Definition: A queue for storing pending tasks, with three types:

- Direct submission queue: e.g., SynchronousQueue, which has no capacity. Each task is handed directly to a waiting thread. If no thread is available to take it, a new thread is created, up to maximumPoolSize (used in CachedThreadPool).

- Bounded queue: e.g., ArrayBlockingQueue (with specified capacity); triggers rejection policies when full (recommended to avoid OOM).

- Unbounded queue: e.g., LinkedBlockingQueue (default capacity Integer.MAX_VALUE), which may cause memory overflow. It is used by newFixedThreadPool and newSingleThreadExecutor and is not recommended.

- Recommendation: For high-concurrency scenarios, prefer bounded queues (e.g., ArrayBlockingQueue(1000)), combined with reasonable maximumPoolSize and rejection policies.

- threadFactory (Thread Factory)

- Role: A factory for creating threads, which can customize thread names, priorities, and whether they are daemon threads.

- Best practice: Name threads using ThreadFactoryBuilder (e.g., from the Guava library) for easier troubleshooting:

```java
import com.google.common.util.concurrent.ThreadFactoryBuilder; // Guava

ThreadFactory namedFactory = new ThreadFactoryBuilder()
        .setNameFormat("my-thread-pool-%d").build();
```

- handler (Rejection Policy)

- Trigger condition: When the task queue is full and the number of threads reaches maximumPoolSize, new tasks are rejected and handled via rejection policies.

- Four built-in rejection policies:

- AbortPolicy (default): Directly throws RejectedExecutionException, requiring the caller to catch and handle it.

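- CallerRunsPolicy: The thread that submitted the task (not necessarily the main thread) runs the task itself. This slows down submission and acts as natural back-pressure. It suits cases where tasks must not be lost and running some of them synchronously in the caller is acceptable.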

- DiscardPolicy: Silently discards unprocessable tasks (may lose data, use with caution).

- DiscardOldestPolicy: Discards the oldest task in the queue and tries to submit the new task (risk of data loss).

- Custom policies: Implement the RejectedExecutionHandler interface to define custom logic (e.g., logging, writing to the database for retries).
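The interaction of `corePoolSize`, `workQueue`, `maximumPoolSize`, and `handler` can be shown with a deliberately tiny pool: 1 core thread, at most 2 threads, and a queue with capacity 1. Every task blocks until released, so the pool saturates predictably:

- Task 1 gets a core thread.
- Task 2 waits in the queue.
- Task 3 triggers a non-core thread.
- Task 4 goes to the rejection policy.

The class name and sizes are hypothetical. Only the `ThreadPoolExecutor` constructor and the built-in policies are real JDK API. With an unbounded queue, task 3 would simply be queued and the pool would stay at one thread.

```java
import java.util.concurrent.ArrayBlockingQueue;
import java.util.concurrent.CountDownLatch;
import java.util.concurrent.RejectedExecutionException;
import java.util.concurrent.RejectedExecutionHandler;
import java.util.concurrent.ThreadPoolExecutor;
import java.util.concurrent.TimeUnit;

class SaturationDemo {
    // Pool: 1 core thread, max 2 threads, queue capacity 1, and the given rejection policy.
    static String saturate(RejectedExecutionHandler handler) {
        ThreadPoolExecutor pool = new ThreadPoolExecutor(
                1, 2, 30, TimeUnit.SECONDS, new ArrayBlockingQueue<>(1), handler);
        CountDownLatch release = new CountDownLatch(1);
        Runnable blocker = () -> {
            try {
                release.await();                 // keep the worker busy until released
            } catch (InterruptedException e) {
                Thread.currentThread().interrupt();
            }
        };
        String[] fourth = {"not run"};
        try {
            pool.execute(blocker);  // task 1: new core thread (pool size 1)
            pool.execute(blocker);  // task 2: core thread busy, goes into the queue (now full)
            pool.execute(blocker);  // task 3: queue full, below max, new non-core thread (size 2)
            String sizes = "threads=" + pool.getPoolSize() + " queued=" + pool.getQueue().size();
            try {
                // task 4: queue full and pool at maximumPoolSize, so the handler decides
                pool.execute(() -> fourth[0] = "ran on " + Thread.currentThread().getName());
            } catch (RejectedExecutionException e) {
                fourth[0] = "rejected";
            }
            return sizes + ", task 4: " + fourth[0];
        } finally {
            release.countDown();                 // unblock the workers
            pool.shutdown();
        }
    }

    public static void main(String[] args) {
        // prints "threads=2 queued=1, task 4: rejected"
        System.out.println(saturate(new ThreadPoolExecutor.AbortPolicy()));
        // prints "threads=2 queued=1, task 4: ran on main"
        System.out.println(saturate(new ThreadPoolExecutor.CallerRunsPolicy()));
        // prints "threads=2 queued=1, task 4: not run"
        System.out.println(saturate(new ThreadPoolExecutor.DiscardPolicy()));
        // Custom policy as a lambda: here it only logs the rejection.
        System.out.println(saturate((task, executor) ->
                System.err.println("rejected, queue size " + executor.getQueue().size())));
    }
}
```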

(IV) Best Practices for Thread Pool Parameter Configuration

- Avoid unbounded queues: Prefer bounded queues (e.g., ArrayBlockingQueue) to prevent memory overflow.

- Set core thread count reasonably:

- Get CPU cores via Runtime.getRuntime().availableProcessors().

- CPU-bound tasks: corePoolSize = CPU cores + 1.

- I/O-bound tasks: corePoolSize = 2 * CPU cores (or determined via pressure testing).

- Choose appropriate rejection policies:

- Critical business: Use AbortPolicy with exception capture, logging, or administrator notifications.

- Non-critical business: Use DiscardPolicy or custom retry logic.

- Monitor thread pool status: Track queue length and active threads with methods such as getQueue().size() and getActiveCount(), and adjust parameters at runtime if needed (e.g., setCorePoolSize(), setMaximumPoolSize()).
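The best practices above can be combined into one construction sketch. It uses plain JDK classes instead of Guava, so the small `named(...)` thread factory is a hypothetical helper. The following values are assumptions for an I/O-bound workload and should be tuned by load testing:

- the multipliers (2x and 4x the core count)
- the queue capacity of 1000
- the choice of `CallerRunsPolicy`

```java
import java.util.concurrent.ArrayBlockingQueue;
import java.util.concurrent.ThreadFactory;
import java.util.concurrent.ThreadPoolExecutor;
import java.util.concurrent.TimeUnit;
import java.util.concurrent.atomic.AtomicInteger;

class PoolConfig {
    // Hypothetical helper: a JDK-only thread factory that names threads "<prefix>-1", "<prefix>-2", ...
    static ThreadFactory named(String prefix) {
        AtomicInteger seq = new AtomicInteger(1);
        return runnable -> new Thread(runnable, prefix + "-" + seq.getAndIncrement());
    }

    // Illustrative settings for an I/O-bound workload; tune the numbers by load testing.
    static ThreadPoolExecutor ioPool() {
        int cores = Runtime.getRuntime().availableProcessors();
        return new ThreadPoolExecutor(
                2 * cores,                                   // corePoolSize: I/O-bound rule of thumb
                4 * cores,                                   // maximumPoolSize: assumed burst headroom
                60, TimeUnit.SECONDS,                        // keepAliveTime and unit for non-core threads
                new ArrayBlockingQueue<>(1000),              // bounded workQueue, avoids unbounded growth
                named("io-pool"),                            // threadFactory: readable thread names
                new ThreadPoolExecutor.CallerRunsPolicy());  // handler: submitter runs the task when saturated
    }

    public static void main(String[] args) throws InterruptedException {
        ThreadPoolExecutor pool = ioPool();
        for (int i = 0; i < 10; i++) {
            pool.execute(() -> System.out.println("handled by " + Thread.currentThread().getName()));
        }
        // Monitoring hooks mentioned above.
        System.out.println("queued=" + pool.getQueue().size() + ", active=" + pool.getActiveCount());
        pool.shutdown();
        pool.awaitTermination(10, TimeUnit.SECONDS);
    }
}
```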

(V) Summary

| Thread Pool Type | Core Scenarios | Recommended? | Risk Tips |
| --- | --- | --- | --- |
| FixedThreadPool | Fixed concurrent tasks (e.g., database connections) | No | Unbounded queue may cause OOM |
| CachedThreadPool | Few short-term tasks (e.g., temporary calculations) | No | May create a huge number of threads, exhausting CPU or memory |
| SingleThreadExecutor | Sequential tasks (e.g., singleton resource operations) | No | Unbounded queue may cause OOM |
| ScheduledThreadPool | Timed/periodic tasks (e.g., cache cleaning) | No | Unbounded delay queue may cause OOM |
| Manually created ThreadPoolExecutor | All production scenarios (custom parameters) | Yes | Parameters must be tuned to the workload |

Core principle: There is no “silver bullet” for thread pool configuration. It requires comprehensive tuning based on business characteristics (task type, concurrency, data importance) and hardware resources (CPU, memory). Prioritize bounded queues and reasonable rejection policies to avoid system crashes.

IV. Advantages and Disadvantages of Multithreading

Advantages

- Improves CPU utilization, suitable for I/O-bound tasks (e.g., network requests, file reading/writing).

- Enhances program response speed, avoiding main thread blocking.

- Simplifies the programming model by splitting complex tasks into threads.

Disadvantages

- Complex thread safety issues, requiring additional handling of shared resource competition.

- Context-switching overhead, which grows as the number of threads increases.

- Debugging and testing are difficult, because race conditions often appear only intermittently and are hard to reproduce.

V. Applicable Scenarios

- I/O-bound tasks: Web crawlers (multiple threads downloading web pages simultaneously), file processing (multi-threaded reading/writing of different files).

- Real-time interactive programs: GUI applications (main thread handles the interface, sub-threads handle data loading).

- Task splitting: Splitting complex calculations into sub-tasks for parallel processing (e.g., video rendering, big data analysis).

- Server applications: Multi-threaded handling of client requests (e.g., thread pool model of web servers).
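The task-splitting pattern can reuse the `Callable` approach from Section III. The array is cut into chunks, each chunk is summed by its own task, and `invokeAll` waits for all the partial sums. The class name and chunking scheme are illustrative. `Executors.newFixedThreadPool` is used only for brevity, since the number of submitted tasks is small and known in advance.

```java
import java.util.ArrayList;
import java.util.List;
import java.util.concurrent.Callable;
import java.util.concurrent.ExecutorService;
import java.util.concurrent.Executors;
import java.util.concurrent.Future;

class SplitSumDemo {
    // Splits data[] into roughly equal chunks, sums each chunk in its own task, then combines.
    static long parallelSum(int[] data, int parts) throws Exception {
        ExecutorService pool = Executors.newFixedThreadPool(parts);
        try {
            List<Callable<Long>> chunks = new ArrayList<>();
            int size = (data.length + parts - 1) / parts;      // ceiling division
            for (int start = 0; start < data.length; start += size) {
                final int from = start;
                final int to = Math.min(start + size, data.length);
                chunks.add(() -> {
                    long sum = 0;
                    for (int i = from; i < to; i++) {
                        sum += data[i];
                    }
                    return sum;                                 // partial result for this chunk
                });
            }
            long total = 0;
            for (Future<Long> f : pool.invokeAll(chunks)) {     // blocks until every chunk is done
                total += f.get();
            }
            return total;
        } finally {
            pool.shutdown();
        }
    }

    public static void main(String[] args) throws Exception {
        int[] data = new int[1000];
        for (int i = 0; i < data.length; i++) {
            data[i] = i + 1;
        }
        System.out.println(parallelSum(data, 4)); // prints 500500
    }
}
```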

VI. Notes

- Avoid excessive use of threads: Too many threads lead to high memory usage and frequent context switching, reducing performance.

- Thread-safe design: Operations on shared resources must be locked or use atomic operations to ensure data consistency.

- Use thread pools reasonably: Reuse threads via thread pools to avoid the overhead of frequent thread creation/destruction (e.g., Java’s ExecutorService, Python’s concurrent.futures).

- Handle thread blocking: Avoid long-term thread blocking (e.g., deadlocks, unlimited waiting) and set timeout mechanisms.
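For the timeout advice, `ReentrantLock.tryLock(long, TimeUnit)` lets a thread give up instead of waiting indefinitely for a lock. In this sketch a second thread holds the lock, so the timed attempt returns `false` after about 100 ms. The class and method names are hypothetical. A real application would log, retry, or report the failure at that point.

```java
import java.util.concurrent.CountDownLatch;
import java.util.concurrent.TimeUnit;
import java.util.concurrent.locks.ReentrantLock;

class TimeoutDemo {
    static final ReentrantLock LOCK = new ReentrantLock();

    // Tries to take the lock for at most `millis` ms instead of blocking forever.
    static boolean tryWork(long millis) throws InterruptedException {
        if (LOCK.tryLock(millis, TimeUnit.MILLISECONDS)) {
            try {
                return true;                   // the critical section would go here
            } finally {
                LOCK.unlock();
            }
        }
        return false;                          // timed out: log, retry later, or report an error
    }

    // Runs tryWork() while another thread holds the lock.
    static boolean tryWhileContended(long millis) throws InterruptedException {
        CountDownLatch held = new CountDownLatch(1);
        CountDownLatch release = new CountDownLatch(1);
        Thread holder = new Thread(() -> {
            LOCK.lock();
            try {
                held.countDown();              // signal: lock acquired
                release.await();               // keep holding it until told to stop
            } catch (InterruptedException e) {
                Thread.currentThread().interrupt();
            } finally {
                LOCK.unlock();
            }
        });
        holder.start();
        held.await();                          // make sure the other thread owns the lock
        try {
            return tryWork(millis);            // expected to time out and return false
        } finally {
            release.countDown();
            holder.join();
        }
    }

    public static void main(String[] args) throws InterruptedException {
        System.out.println(tryWhileContended(100)); // prints false
        System.out.println(tryWork(100));           // prints true (lock is free now)
    }
}
```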